The article “Consommer et produire : l’essence de la prison numérique” first appeared on CONTRETEMPS.

After two years spent diversifying its fields of action, La Quadrature du Net is now opening a new front: the fight against the flood of artificial intelligence (AI) into every part of society. To keep alive the critique of an authoritarian and ecocidal digital policy, La Quadrature needs your support more than ever in 2025.

For several years, together with other collectives in France and Europe, we have been documenting the very concrete, sector-by-sector consequences of the growing adoption of artificial intelligence: through the Technopolice and France Contrôle campaigns and, more recently, through investigations into the environmental impact of the data centers that accompany the exponential growth in storage and computing capacity.

A triple capitalist accumulation

In recent months, following the sudden hype around generative artificial intelligence and products like ChatGPT, we have been witnessing a new acceleration of the computerization process, under the aegis of big corporations and complicit states. Yet this acceleration is the direct consequence of everything that is already wrong with the dominant digital trajectory. First, a formidable accumulation of data over many years by the big tech multinationals such as Google, Microsoft, Meta and Amazon, which surveil us in order to better predict our behavior, and which are now able to index gigantic corpora of text, sound and images by appropriating the commons that is the Web.

Collecting, storing and processing all this data requires a prodigious accumulation of resources. This shows first in capital: the rise of tech, doped on surveillance capitalism, has won the favor of financial markets and benefited from accommodating public policies. With this capital, these companies can finance a near-exponential growth in the data storage and computing capacity needed to train and run their AI models, by investing in graphics chips (GPUs), undersea cables and data centers. These components and infrastructures in turn require immense quantities of rare earths and rare metals, water and electricity.

When you keep in mind this triple accumulation (of data, of capital, of resources), you understand why AI is the product of everything that is already wrong with the digital economy, and how it adds to the bill. Yet the marketing (and media) myth of artificial intelligence deliberately obscures the stakes and the intrinsic limits of these systems, including the best-performing ones (bias, hallucinations, the wasteful resources needed to run them).

Exploitation squared

The political and media frenzy around AI ignores the concrete effects of these systems. Far from solving humanity’s current problems through some supposedly superior rationality emerging from its calculations, “AI” in its concrete uses amplifies every existing injustice. In the economic sphere, it translates into the massive and brutal exploitation of hundreds of thousands of “data workers” tasked with refining the models and validating their results. Downstream, in the organizations where these systems are deployed, it brings a new power grab by managers over workers in order to increase company profitability.

Admittedly, some relatively privileged workers in the service sector or the “creative classes” currently see in it an unhoped-for opportunity to “save time”, in a society sick from the race for productivity. This is a new “dictatorship of convenience”: at the individual level, everything pushes us to be complicit in these logics of collective dispossession. Rather than freeing employees, it is a safe bet that the automation of work brought about by growing reliance on AI will in fact further accelerate the pace of work. As with previous waves of computerization, AI is also likely to bring a dispossession of knowledge and a deskilling of the occupations it touches, while contributing to lower wages, worse working conditions and the massive destruction of skilled jobs, thereby deepening the precarity of entire swaths of the population.

In the public sector too, AI intensifies the automation and austerity already hitting public services, with harmful consequences for social ties and inequality. National education, where the “educational” AIs of a startup founded by a former Microsoft employee have been tested since September 2024 without any prior evaluation, appears to be a particularly sensitive area where these changes are already under way.

Dismantling the myth

To sustain the myth of “artificial intelligence” and downplay its dangers, one emblematic example is systematically put forward: AI is said to be able to interpret medical images better than a human eye, and to detect cancers faster and earlier than a doctor. It could even read test results to recommend the best treatment, thanks to an encyclopedic memory of existing cases and their specifics. For now, these tools are under development and merely supplement doctors’ knowledge, whether in reading images or in supporting treatment decisions.

Whatever their real effectiveness, “medical” use cases function in the mythology of AI as a heroic, isolated moment that in reality hides an entirely different program for society. The same strategy of mystification is found in other domains. To justify the surveillance of communications, governments have for more than twenty years brandished the need to fight child sexual abuse or terrorism. In the mythology of police algorithmic video surveillance (VSA), it is the example of the little girl lost in the city and found within minutes thanks to cameras and facial recognition that is systematically used to make the case for total video surveillance of our streets.

We must pull aside the screen of the virtuous example to reveal the unavowable uses hidden behind it, at the cost of an insidious erosion of rights and freedoms. We must realize that, as an industrial paradigm, AI multiplies the harms and violence of contemporary capitalism and worsens the exploitations that subjugate us. And that it multiplies state violence, as illustrated by the growing place given to these systems within military apparatuses, as in Gaza, where the Israeli army uses it to speed up the designation of targets for its bombings.

Charting alternatives

Instead of fighting AI and its harms, the public policies pursued today in France and Europe seem essentially designed to reinforce the hegemony of tech. This is notably the case of the AI Act, or “AI regulation”, which is endlessly presented as a bulwark against the dangers of “abuses” even though it seeks to deregulate a booming market. In the era of the Startup Nation and absurd praise for innovation, AI appears to most leaders as a lifeline, a Grail that alone could save Europe from economic shipwreck.

Again and again, it is the argument of geopolitical competition that is used to silence critics: whether in the report of the government committee on generative AI or in Mario Draghi’s, the aim is to flood multinationals and start-ups with capital so that Europe can stay in the race against the United States and China. In other words, treating the disease with more of the disease, repeating the mistakes made for more than fifteen years: ever more “magic money” for tech, while public services and other commons are bound to austerity. It is the choice to roll back protections for rights and freedoms in order to let AI proliferate throughout society.

These policies are absurd, since everything suggests that Europe’s industrial lag in AI cannot be made up, and that this race is therefore lost in advance. Above all, these policies are dangerous because, far from being the saving technology so often touted, AI accelerates ecological disaster, amplifies discrimination and increases many forms of domination. The current paradigm not only locks us into an unsustainable headlong rush, it also prevents us from inventing an emancipatory political path consistent with planetary boundaries.

AI may be presented as inevitable, but we refuse to resign ourselves. Faced with the soft consensus that props up a devastating capitalist system, we want to help organize resistance and sketch out alternatives. But to continue our work in 2025, we need your support. So if you can, head to don.laquadrature.net!

When we hear about artificial intelligence, we are being told the story of a modern myth: that of a miraculous AI that will save the world, or of an AI endowed with a will of its own that wants to destroy it. Yet behind this fantasized “AI” lies a material reality with real consequences. This theme will be central to our work in 2025, which is why we begin by deconstructing these fantasies: no, it’s not AI, it’s the exploitation of nature, the exploitation of humans, and the ordering of our lives for authoritarian ends that always target the most vulnerable people.

To sustain our fight against authoritarian, capitalist and ecocidal digital technology, and to continue our work on positive proposals for free, emancipatory and unifying digital technology, we need your support!

It’s not AI, it’s state-of-the-art exploitation

AI is the direct extension of capitalist logics of exploitation. This technology was able to emerge because the tech sector has seized so many resources: first our personal data, then immense financial capital, and finally the natural resources needed to train AI models, whose extraction relies on colonialism as well as on human labor. Once deployed in the workplace and the public sector, AI worsens precarity and deskilling in the name of a frantic race for productivity.

It’s not AI, it’s an immense ecocidal infrastructure

The rise of AI depends on the extraction of rare minerals to manufacture the electronic chips essential to its computations. It also leads tech multinationals to multiply data centers, gigantic, energy-hungry facilities that must be cooled around the clock. All over the planet, human communities are thus robbed of their water, while coal-fired power plants are restarted to produce the electricity these facilities need. Behind the companies’ greenwashing, the material infrastructure of AI is driving an alarming increase in their greenhouse gas emissions.

It’s not AI, it’s the automation of the police state

Whether through predictive policing or algorithmic video surveillance, AI amplifies police brutality and reinforces structural discrimination. Behind a supposedly scientific veneer, these technologies arm the repression of the working classes and political activists. They make possible the systematic surveillance of urban public space and, in doing so, help bring about a world where the slightest deviation from the norm can be detected and then punished by the state.

It’s not AI, it’s the computerized hunt for the poor

Under the guise of “rationalization”, AI is invading welfare administrations through the development of self-learning algorithms aimed at detecting potential fraudsters. Allocations Familiales, Assurance Maladie, Assurance Vieillesse, Mutualité Sociale Agricole: these systems are now deployed in the main administrations of the “welfare state”. They assign a “suspicion score” to each of us in order to select the people to be audited, and our research shows that they deliberately target the most precarious.

It’s not AI, it’s the auctioning of our available brain time

The capture of our personal data feeds advertising-profiling AIs, which match ads in real time to our “profiles”, sold to the highest bidders. This commodification of our attention also shapes centralized social networks, which are governed by content-recommendation AIs that turn them into sites of binary radicalization between political camps. Finally, to develop products like ChatGPT, companies in the sector must amass immense corpora of text, sound and images, appropriating the commons that is the Web in the process.

Why donate to us this year?

To balance the budget for the coming year, we hope to raise €260,000 in donations, counting existing monthly donations and all new monthly or one-off donations.

What are your donations actually used for?

The association has a fantastic team of volunteer members, but it also needs a salaried team.

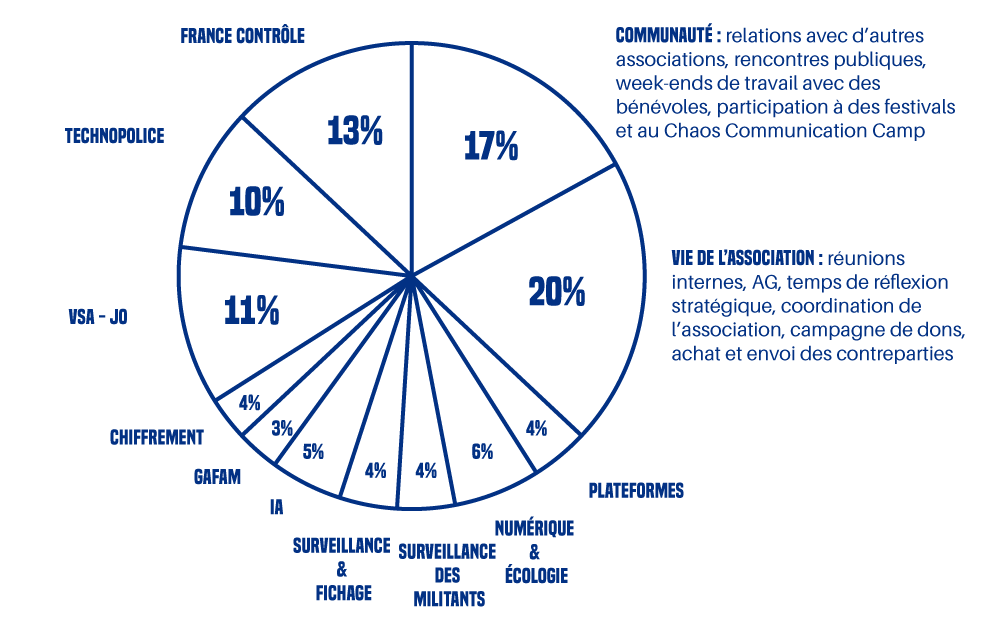

Donations mainly go toward paying the salaries of the association’s permanent staff (75% of expenses). The other costs to cover are rent and upkeep of the premises, travel in France and abroad (by train only), costs related to campaigns and events, and the various material costs of any activist work (posters, stickers, paper, printer, t-shirts, etc.).

To give you an idea, when we break down our 2024 spending (salaries included) across our campaigns, according to the time each person spent on the topics we fight for, it looks like this:

Where does our funding come from?

The association receives no public money, but it does receive support, amounting to 40% of its budget, from various philanthropic foundations: the Fondation pour le progrès de l’Homme, the foundation Un monde par tous, Open Society Foundations, the Limelight Foundation and the Digital Freedom Fund.

The rest of our budget comes from your donations. So if you can, help us!

Please note that, as we explain in the FAQ on our site, donations to La Quadrature are not tax-deductible, the tax authorities having twice refused us this possibility.

How to donate?

You can donate by bank card, by check or by bank transfer.

And if you can make a monthly donation, even a tiny one, don’t hesitate. They are our favorites: by ensuring income throughout the year, they let us work with more confidence in the sustainability of our actions.

In addition, your cumulative donations entitle you to rewards (bag, t-shirt, sweatshirt). Note that shipping is not automatic: you need to log in and make the request on your personal donor page. And if the rewards take a while to arrive, which is not unusual, it’s because we are swamped, or waiting for a restock in certain sizes, and also because we do all of this ourselves with our own little hands. But they always arrive in the end!

Thanks again for your generosity, and thank you very much for your patience <3

I think I’m probably going to lose quite a lot of money in the next year or two. It’s partly AI’s fault, but not mostly. Nonetheless I’m mostly going to write about AI, because it intersects the technosphere, where I’ve lived for decades.

I’ve given up having a regular job. The family still has income but mostly we’re harvesting our savings, built up over decades in a well-paid profession. Which means that we are, willy-nilly, investors. And thus aware of the fever-dream finance landscape that is InvestorWorld.

The Larger Bubble

Put in the simplest way: Things have been too good for too long in InvestorWorld: low interest, high profits, the unending rocket rise of the Big-Tech sector, now with AI afterburners. Wile E. Coyote hasn’t actually run off the edge of the cliff yet, but there are just way more ways for things to go wrong than right in the immediate future.

If you want to dive a little deeper, The Economist has a sharp (but paywalled) take in Stockmarkets are booming. But the good times are unlikely to last. Their argument is that profits are overvalued by investors because, in recent years, they’ve always gone up. Mr Market ignores the fact that at least some of those gleaming profits are artifacts of tax-slashing by right-wing governments.

That piece considers the observation that “Many investors hope that AI will ride to the rescue” and is politely skeptical.

Popping the bubble

My own feelings aren’t polite; closer to Yep, you are living in a Nvidia-led tech bubble by Brian Sozzi over at Yahoo! Finance.

Sozzi is fair, pointing out that this bubble feels different from the cannabis and crypto crazes; among other things, chipmakers and cloud providers are reporting big high-margin revenues for real actual products. But he hammers the central point: What we’re seeing is FOMO-driven dumb money thrown at technology where the people throwing the money have no hope of understanding. Just because everybody else is and because the GPTs and image generators have cool demos. Sozzi has the numbers, looking at valuations through standard old-as-dirt filters and shaking his head at what he sees.

What’s going to happen, I’m pretty sure, is that AI/ML will, inevitably, disappoint; in the financial sense I mean, probably doing some useful things, maybe even a lot, but not generating the kind of profit explosions that you’d need to justify the bubble. So it’ll pop, and my bet is it takes a bunch of the finance world with it. As bad as 2008? Nobody knows, but it wouldn’t surprise me.

The rest of this piece considers the issues facing AI/ML, with the goal of showing why I see it as a bubble-inflator and eventual bubble-popper.

First, a disclosure: I speak as an educated amateur. I’ve never gone much below the surface of the technology, never constructed a model or built model-processing software, or looked closely at the math. But I think the discussion below still works.

What’s good about AI/ML

Spoiler: I’m not the kind of burn-it-with-fire skeptic that I became around anything blockchain-flavored. It is clear that generative models manage to embed significant parts of the structure of language, of code, of pictures, of many things where that has previously not been the case. The understanding is sufficient to reliably accomplish the objective: Produce plausible output.

I’ve read enough Chomsky to believe that facility with language is a defining characteristic of intelligence. More than that, a necessary but not sufficient ingredient. I dunno if anyone will build an AGI in my lifetime, but I am confident that the task would remain beyond reach without the functions offered by today’s generative models.

Furthermore, I’m super impressed by something nobody else seems to talk about: Prompt parsing. Obviously, prompts are processed into a representation that reliably sends the model-traversal logic down substantially the right paths. The LLMbots of this world may regularly be crazy and/or just wrong, but they do consistently if not correctly address the substance of the prompt. There is seriously good natural-language engineering going on here that AI’s critics aren’t paying enough attention to.

So I have no patience with those who scoff at today’s technology, accusing it of being a glorified Markov chain. Like the song says: Something’s happening here! (What it is ain’t exactly clear.)

It helps that in the late teens I saw neural-net pattern-matching at work on real-world problems from close up and developed serious respect for what that technology can do; an example is EC2’s Predictive Auto Scaling (and gosh, it looks like the competition has it too).

And recently, Adobe Lightroom has shipped a pretty awesome “Select Sky” feature. It makes my M2 MacBook Pro think hard for a second or two, but I rarely see it miss even an isolated scrap of sky off in the corner of the frame. It allows me, in a picture like this, to make the sky’s brightness echo the water’s.

And of course I’ve heard about success stories in radiology and other disciplines.

Thus, please don’t call me an “AI skeptic” or some such. There is a there there.

But…

Given that, why do I still think that the flood of money being thrown at this tech is dumb, and that most of it will be lost? Partly just because of that flood. When financial decision makers throw loads of money at things they don’t understand, lots of it is always lost.

In the Venture-Capital business, that’s an understood part of the business cycle; they’re looking to balance that out with a small number of 10x startup wins. But when big old insurance companies and airlines and so on are piling in and releasing effusive statements about building the company around some new tech voodoo, the outcome, in my experience, is very rarely good.

But let’s be specific.

Meaning

As I said above, I think the human mind has a large and important language-processing system. But that’s not all. It’s also a (slow, poorly-understood) computer, with access to a medium-large database of facts and recollections, an ultra-slow numeric processor, and facilities for estimation, prediction, speculation, and invention. Let’s group all this stuff together and call it “meaning”.

Have a look at Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data by Emily Bender and Alexander Koller (July 2020). I don’t agree with all of it, and it addresses an earlier generation of generative models, but it’s very thought-provoking. It postulates the “Octopus Test”, a good variation on the bad old Chinese-Room analogy. It talks usefully about how human language acquisition works. A couple of quotes: “It is instructive to look at the past to appreciate this question. Computational linguistics has gone through many fashion cycles over the course of its history” and “In this paper, we have argued that in contrast to some current hype, meaning cannot be learned from form alone.”

I’m not saying these problems can’t be solved. Software systems can be equipped with databases of facts, and who knows, perhaps some day estimation, prediction, speculation, and invention. But it’s not going to be easy.

Difficulty

I think there’s a useful analogy between the stories of AI and of self-driving cars. As I write this, Apple has apparently decided that generative AI is easier than shipping an autonomous car. I’m particularly sensitive to this analogy because back around 2010, as the first self-driving prototypes were coming into view, I predicted, loudly and in public, that this technology was about to become ubiquitous and turn the economy inside out. Ouch.

There’s a pattern: The technologies that really do change the world tend to have strings of successes, producing obvious benefits even in their earliest forms, to the extent that geeks load them in the back doors of organizations just to get shit done. As they say, “The CIO is the last to know.”

Contrast cryptocurrencies and blockchains, which limped along from year to year, always promising a brilliant future, never doing anything useful. As to the usefulness of self-driving technology, I still think it’s gonna get there, but it’s surrounded by a cloud of litigation.

Anyhow, anybody who thinks that it’ll be easy to teach “meaning” (as I described it above) to today’s generative AI is a fool, and you shouldn’t give them your money.

Money and carbon

Another big problem we’re not talking about enough is the cost of generative AI. Nature offers Generative AI’s environmental costs are soaring — and mostly secret. In a Mastodon thread, @Quixoticgeek@social.v.st says We need to talk about data centres, and includes a few hard and sobering numbers.

Short form: This shit is expensive, in dollars and in carbon load. Nvidia pulled in $60.9 billion in 2023, up 126% from the previous year, and is heading for a $100B/year run rate, while reporting a 75% margin.

Another thing these articles don’t mention is that building, deploying, and running generative-AI systems requires significant effort from a small group of people who now apparently constitute the world’s highest-paid cadre of engineers. And good luck trying to hire one if you’re a mainstream company where IT is a cost center.

All this means that for the technology to succeed, it not only has to do something useful, but people and businesses will have to be ready to pay a significantly high price for that something.

I’m not saying that there’s nothing that qualifies, but I am betting that it’s not in ad-supported territory.

Also, it’s going to have to deal with pushback from unreasonable climate-change resisters like, for example, me.

Anyhow…

I kind of flipped out, and was motivated to finish this blog piece, when I saw this: “UK government wants to use AI to cut civil service jobs: Yes, you read that right.” The idea — to have citizen input processed and responded to by an LLM — is hideously toxic and broken; and usefully reveals the kind of thinking that makes morally crippled leaders all across our system love this technology.

The road ahead looks bumpy from where I sit. And when the business community wakes up and realizes that replacing people with shitty technology doesn’t show up as a positive on the financials after you factor in the consequences of customer rage, that’s when the hot air gushes out of the bubble.

It might not take big chunks of InvestorWorld with it. But I’m betting it does.

The video recording of the talk La vérité sur la blockchain, which I gave at the Capitole du Libre 2023 festival on Saturday 18 November, has been posted on YouTube.

Money is a central question in economics, and therefore in politics. For several years now, there has been more and more talk of digital currencies. The appearance of the cryptocurrency bitcoin a little over ten years ago sparked the interest of many people and institutions, all the way up to the central banks of the major economic powers. It is therefore time to take a closer look.
